Vision-Aided Blockage Prediction

Leaderboard

| Date | Name | Scenario 17 | Scenario 18 | Scenario 19 | Scenario 20 | Scenario 21 | Scenario 22 |
|---|---|---|---|---|---|---|---|
| 1/15/2021 | Wireless Intelligence Lab ASU | Future-1: 88.64%, Future-5: 89.55%, Future-10: 85.42% | Future-1: 86.96%, Future-5: 88.26%, Future-10: 88.46% | Future-1: 89.47%, Future-5: 89.30%, Future-10: 87.11% | Future-1: 90.74%, Future-5: 87.04%, Future-10: 80.59% | Future-1: 92.86%, Future-5: 88.57%, Future-10: 80.30% | Future-1: 83.30%, Future-5: 83.33%, Future-10: 70.11% |

- This table lists the proposed vision-aided blockage prediction solutions and provides a benchmark for comparing their performance.

- For the individual DeepSense scenarios (development datasets), we use the “Future-N” blockage prediction accuracy as the evaluation metric.

- For further details regarding the ML challenge and how to participate, please check the “ML Challenge” section below.

License

A. Alkhateeb, G. Charan, T. Osman, A. Hredzak, J. Morais, U. Demirhan, and N. Srinivas, “DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset,” IEEE Communications Magazine, 2023.

@Article{DeepSense,
  author={Alkhateeb, Ahmed and Charan, Gouranga and Osman, Tawfik and Hredzak, Andrew and Morais, Joao and Demirhan, Umut and Srinivas, Nikhil},
  title={DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset},
  journal={IEEE Communications Magazine},
  year={2023},
  publisher={IEEE}}

G. Charan and A. Alkhateeb, “Computer Vision Aided Blockage Prediction in Real-World Millimeter Wave Deployments,” 2022 IEEE Globecom Workshops (GC Wkshps), 2022, pp. 1711–1716.

@InProceedings{charan2022,
  title={Computer vision aided blockage prediction in real-world millimeter wave deployments},
  author={Charan, Gouranga and Alkhateeb, Ahmed},
  booktitle={2022 IEEE Globecom Workshops (GC Wkshps)},
  pages={1711--1716},
  year={2022},
  organization={IEEE}}

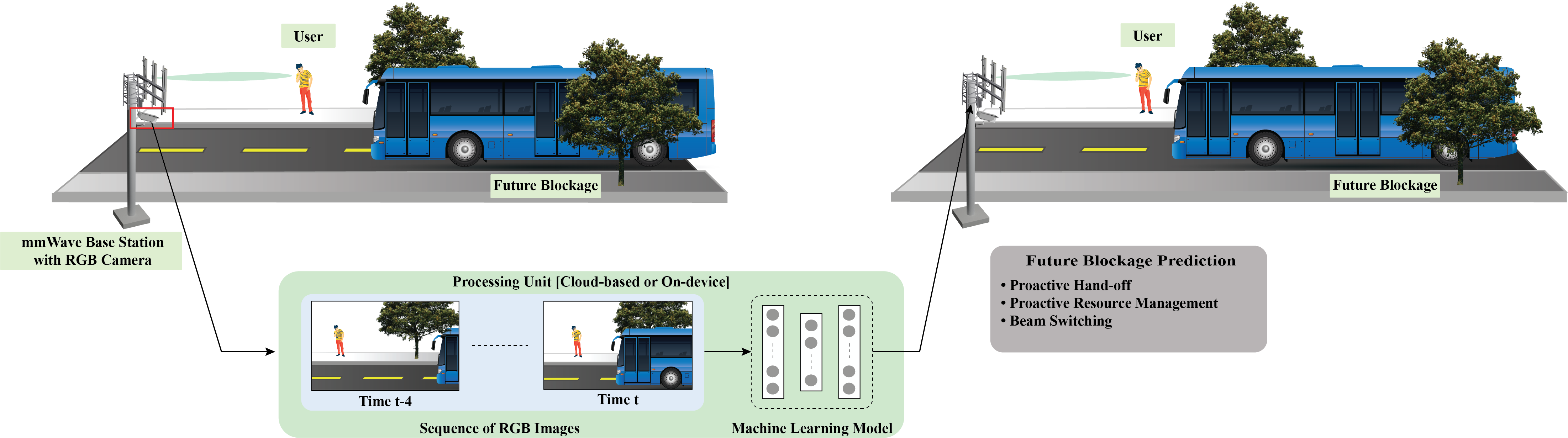

Introduction

LOS link blockage is a challenge: Millimeter-wave (mmWave) and sub-terahertz communications are becoming the dominant directions for modern and future wireless networks. With their large bandwidth, they can satisfy the high data rate demands of applications such as wireless virtual/augmented reality (VR/AR) and autonomous driving. Communication in these bands, however, faces several challenges at both the physical and network layers. One of the key challenges stems from the sensitivity of mmWave and terahertz signal propagation to blockages. As a result, these systems rely heavily on maintaining line-of-sight (LOS) connections between the base stations and users. Stationary or dynamic objects that block these LOS links can severely degrade the reliability and latency of mmWave/terahertz systems, making it hard for them to support highly mobile and latency-sensitive applications.

Sensing-aided blockage prediction is a promising solution: The key to overcoming the link blockage challenge lies in developing a critical sense of the surroundings. Because mmWave/sub-THz communication systems depend on line-of-sight links between the transmitter and receiver, awareness of their locations and of the surrounding environment (geometry of the buildings, moving scatterers, etc.) could help predict future blockages. For example, sensory data collected from RGB cameras, LiDARs, radars, and GPS can help identify probable transmitters and blockages in the wireless environment and understand their mobility patterns. The wireless network can use this information to proactively predict incoming blockages and initiate hand-off beforehand. We call this approach sensing-aided blockage prediction and hand-off. Vision-aided blockage prediction is the special case in which the basestation leverages visual data of the wireless environment, captured by RGB cameras, to proactively predict future LOS link blockages.

Vision-aided blockage prediction: Specific Task Description

Vision-aided blockage prediction at the infrastructure is the task of predicting the future LOS link blockages proactively by utilizing a machine learning model and the images of the wireless environment captured by the camera installed at the basestation.

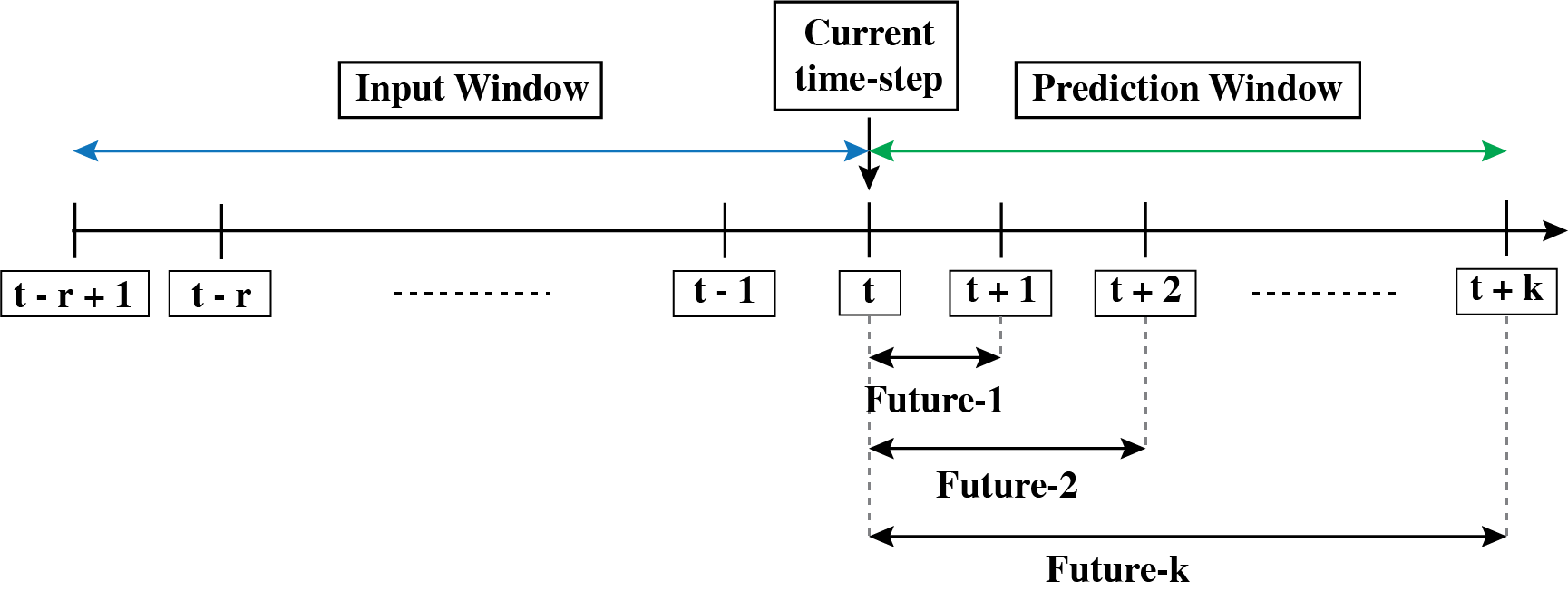

Objective of the ML Task: At any time t, given a sequence of r images {t – r + 1, … , t} of the wireless environment captured by the basestation (the current image and the r – 1 previous ones), the primary objective of this task is to design a machine learning model that predicts future link blockages. In general, the model is expected to return the blockage status over the next k time slots, i.e., {t + 1, … , t + k}. If there is a blockage in any of these slots, the future-k blockage status is labeled as blocked. For ML model development, we provide a labeled dataset consisting of ordered sequences of RGB images (the input to the ML model) and the ground-truth future blockage status. More details regarding the dataset are provided in the Task-Specific Dataset section below.
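The windowing and labeling rule above can be sketched as follows. This is a minimal sketch, assuming the raw data is available as a per-time-slot list of image paths plus a per-slot binary blockage indicator (1 = blocked); the function names `future_k_label` and `make_samples` and this input layout are hypothetical, since the released dataset already ships the labeled sequences.

```python
from typing import List, Sequence, Tuple


def future_k_label(blocked: Sequence[int], t: int, k: int) -> int:
    """Return 1 if any slot in {t+1, ..., t+k} is blocked, else 0."""
    return int(any(blocked[t + 1 : t + k + 1]))


def make_samples(
    frames: Sequence[str], blocked: Sequence[int], r: int, k: int
) -> List[Tuple[List[str], int]]:
    """Build (input window of r images, future-k label) pairs.

    Hypothetical layout: frames[i] is the image captured at slot i and
    blocked[i] is 1 if the LOS link is blocked at slot i.
    """
    if len(frames) != len(blocked):
        raise ValueError("frames and blocked must have the same length")
    samples = []
    # Each t needs r-1 past slots and k future slots inside the sequence.
    for t in range(r - 1, len(frames) - k):
        window = list(frames[t - r + 1 : t + 1])  # images {t-r+1, ..., t}
        samples.append((window, future_k_label(blocked, t, k)))
    return samples
```

For example, with six frames and a single blockage at slot 3, `make_samples(frames, [0, 0, 0, 1, 0, 0], r=2, k=1)` labels only the window ending at slot 2 as blocked, while a future-5 window starting from slot 0 also sees that blockage.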

For further information regarding how vision can aid the blockage prediction task, please refer to our paper here.

Task-Specific Dataset

DeepSense 6G: Developing efficient solutions for sensing-aided blockage prediction and accurately evaluating them requires the availability of a large-scale real-world dataset. With this motivation, we built the DeepSense 6G dataset, the first large-scale real-world multi-modal dataset with co-existing communication and sensing data.

In this vision-aided blockage prediction task, we build the development and challenge datasets from the DeepSense data of scenarios 17–22.

For each scenario, we provide the following datasets:

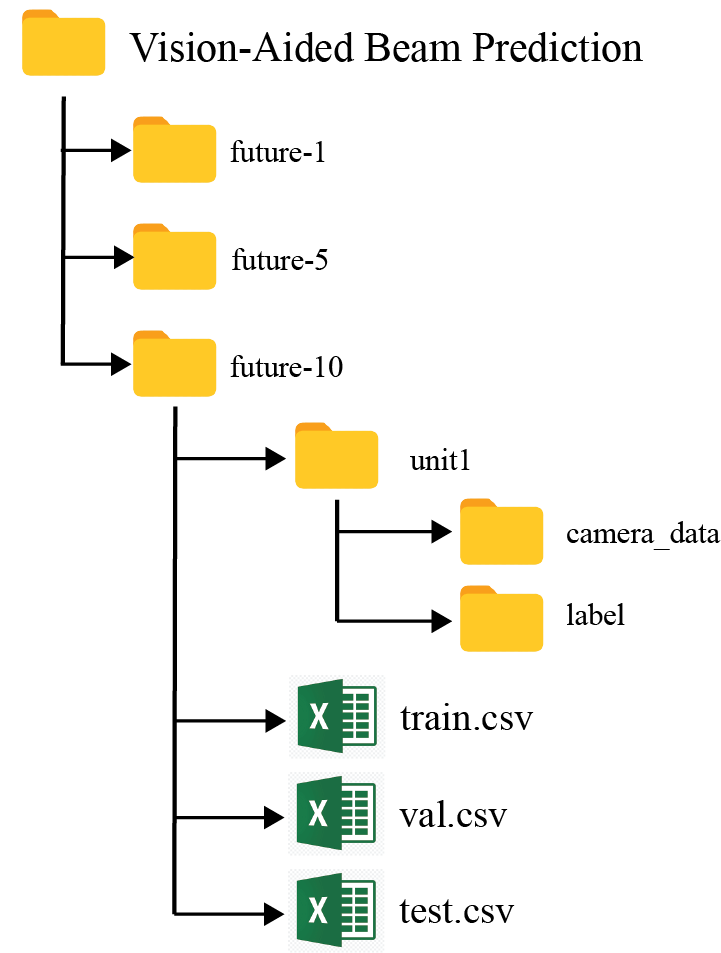

- Development Dataset: It consists of sequences of visual data and the corresponding ground-truth future-k blockage status. The visual data consists of RGB images of the wireless environment captured by the camera installed at the basestation. The task involves predicting for three future windows: future-1, future-5, and future-10.

- Challenge Dataset: To motivate the development of efficient ML models, we propose a benchmark challenge. For this, we provide a Challenge dataset, consisting of only the input RGB image sequences. The ground-truth labels are hidden from the users by design to promote a fair benchmarking process. To participate in this Challenge, check the ML Challenge section below for further details.

Below we explain how to access the development dataset.


How to Access Task Data?

Step 1. Download the All Scenarios dataset.

Step 2. Extract the VABT.zip file, which contains the scenario dataset folders.

Each scenario folder contains the following:

- unit1: includes the visual data (RGB images) and the corresponding blockage labels

- train.csv

- val.csv

- test.csv
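After extraction, a quick layout check can catch incomplete downloads. This is a minimal sketch, assuming `root` points at the extracted VABT folder and that every immediate subfolder is a scenario folder; the folder names used in the example (`scenario17`, `scenario18`) and the function `missing_entries` are hypothetical.

```python
from pathlib import Path
from typing import Dict, List

# Entries each scenario folder is expected to contain, per the list above.
EXPECTED_ENTRIES = ("unit1", "train.csv", "val.csv", "test.csv")


def missing_entries(root: str) -> Dict[str, List[str]]:
    """Map each scenario folder under root to the expected entries it lacks.

    Assumption: every immediate subdirectory of root is a scenario folder.
    """
    report = {}
    for scenario_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        report[scenario_dir.name] = [
            name for name in EXPECTED_ENTRIES if not (scenario_dir / name).exists()
        ]
    return report
```

An empty list for every scenario means all four expected entries were found.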

What does each CSV file contain?

We provide the sequence of visual data and the corresponding future link blockage status. An example of 5 data samples is shown below.
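Each split file pairs an image sequence with a future blockage label, so it can be read with Python's standard `csv` module. This is a minimal sketch with hypothetical column names: it assumes every column whose header starts with `image_prefix` holds one image path of the input sequence (in file order) and that `label_col` holds the 0/1 future blockage status. Check the actual CSV headers and adjust both parameters accordingly.

```python
import csv
from typing import List, Tuple


def load_split(
    csv_path: str, image_prefix: str = "img", label_col: str = "label"
) -> List[Tuple[List[str], int]]:
    """Read one split (e.g. train.csv) into (image sequence, label) pairs.

    image_prefix and label_col are hypothetical defaults, not the
    documented DeepSense column names.
    """
    samples = []
    with open(csv_path, newline="") as f:
        reader = csv.DictReader(f)
        # Image columns, kept in the order they appear in the header.
        image_cols = [c for c in (reader.fieldnames or []) if c.startswith(image_prefix)]
        for row in reader:
            images = [row[c] for c in image_cols]
            samples.append((images, int(row[label_col])))
    return samples
```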

Individual Download Links

To download the individual scenarios, follow the scenario-wise links provided below.


ML Challenge: Vision-Aided Blockage Prediction

To advance the state-of-the-art in the vision-aided blockage prediction task, we propose a benchmark challenge based on the DeepSense real-world dataset. The objective of the task is to develop a machine learning-based model that takes the sequence of images captured at the basestation as the input and predicts the future link blockage status.

This challenge adopts the labeled development dataset described above with RGB images and the corresponding link status.

Participation Steps

Step 1. Getting started: First, we recommend the following:

- Familiarize yourself with the data collection testbed and the different sensor modalities presented here

- Next, on the Tutorials page, we provide Python code to load and visualize the different data modalities

Step 4. Submission: After you develop your ML model, you are invited to submit your results to submission@DeepSense6G.net. Please find the submission process and evaluation criteria below.

Submission Process

We define a standardized blockage prediction result format that serves as the input to our evaluation code. There are six different real-world DeepSense 6G scenarios in this challenge. For each scenario, please submit the following:

- Each scenario has three sub-datasets, each corresponding to one of the three future prediction window lengths, i.e., future-1, future-5, and future-10. Participants must submit the predicted link blockage status for each sub-dataset in the Challenge set. A sample submission CSV file is shown below.

- Every submission should include the pre-trained models, the evaluation code, and a ReadMe file documenting the requirements to run the code.

| sample_index | future-1 |
|---|---|
| 1 | 1 |
| 2 | 0 |
| 3 | 0 |
| 4 | 1 |
| 5 | 0 |
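Predictions can be written in the sample format above with Python's standard `csv` module. This is a minimal sketch, assuming one file per scenario and prediction window, that the second column header follows the window (`future-5`, `future-10`, extrapolated from the `future-1` sample), that 1 marks a predicted blockage, and that `sample_index` is 1-based and follows the row order of the Challenge set. The function name `write_submission` is hypothetical.

```python
import csv
from typing import Sequence


def write_submission(path: str, predictions: Sequence[int], k: int) -> None:
    """Write predictions as rows of (sample_index, future-k status).

    Assumptions: 1 = predicted blockage, 0 = no blockage; sample_index is
    1-based and follows the Challenge set row order.
    """
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["sample_index", f"future-{k}"])
        for i, pred in enumerate(predictions, start=1):
            if pred not in (0, 1):
                raise ValueError(f"prediction {i} must be 0 or 1, got {pred!r}")
            writer.writerow([i, int(pred)])
```

Calling `write_submission("scenario17_future-1.csv", [1, 0, 0, 1, 0], k=1)` reproduces the sample table; the file name here is only an illustration.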

Evaluation

- The evaluation metric adopted in this challenge is the prediction accuracy.

- The evaluation is done based on the ML challenge (hidden) test set, which is used for benchmarking purposes.
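For binary blockage labels, prediction accuracy is the fraction of samples whose predicted future-k status matches the ground truth. The sketch below illustrates that definition only; it is not the official evaluation code, and the function name `blockage_accuracy` is hypothetical.

```python
from typing import Sequence


def blockage_accuracy(predicted: Sequence[int], ground_truth: Sequence[int]) -> float:
    """Fraction of samples whose predicted status equals the true status."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(int(p == g) for p, g in zip(predicted, ground_truth))
    return correct / len(ground_truth)
```

For example, predictions `[1, 0, 0, 1, 0]` against labels `[1, 0, 1, 1, 0]` give 4 correct out of 5, i.e., an accuracy of 0.8 (80%).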

Leaderboard Rules

- In order to participate in this challenge, please submit your results following the submission process above

- Further, to be ranked in the Leaderboard table, contestants need to submit the challenge set results for all six scenarios and all three future prediction windows.
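The ranking rule above amounts to 18 result sets (six scenarios times three windows). A minimal sketch for checking coverage before submitting, assuming results are tracked as (scenario, window) pairs; the function `missing_results` is hypothetical and not part of any official tooling.

```python
from itertools import product
from typing import Iterable, List, Tuple

SCENARIOS = range(17, 23)  # DeepSense scenarios 17-22
WINDOWS = (1, 5, 10)       # future-1, future-5, future-10


def missing_results(submitted: Iterable[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Return the (scenario, window) pairs that are absent from a submission."""
    have = set(submitted)
    return [pair for pair in product(SCENARIOS, WINDOWS) if pair not in have]
```

An empty result means every scenario and window is covered.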