Data Collection

DeepSense Testbeds

- Testbed 1

- Testbed 2

- Testbed 3

- Testbed 4

- Testbed 5

- Testbed 6

- Testbed 7

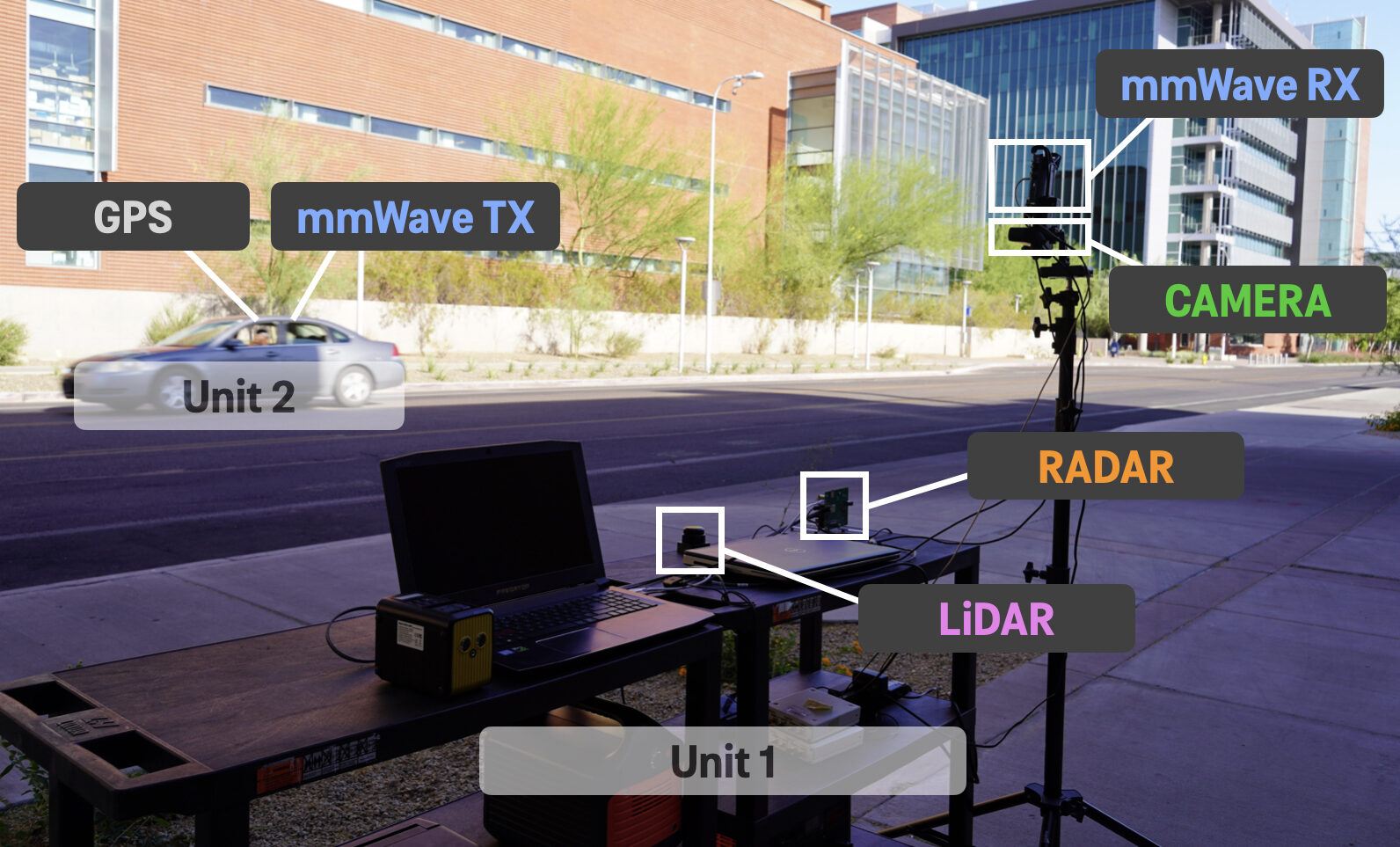

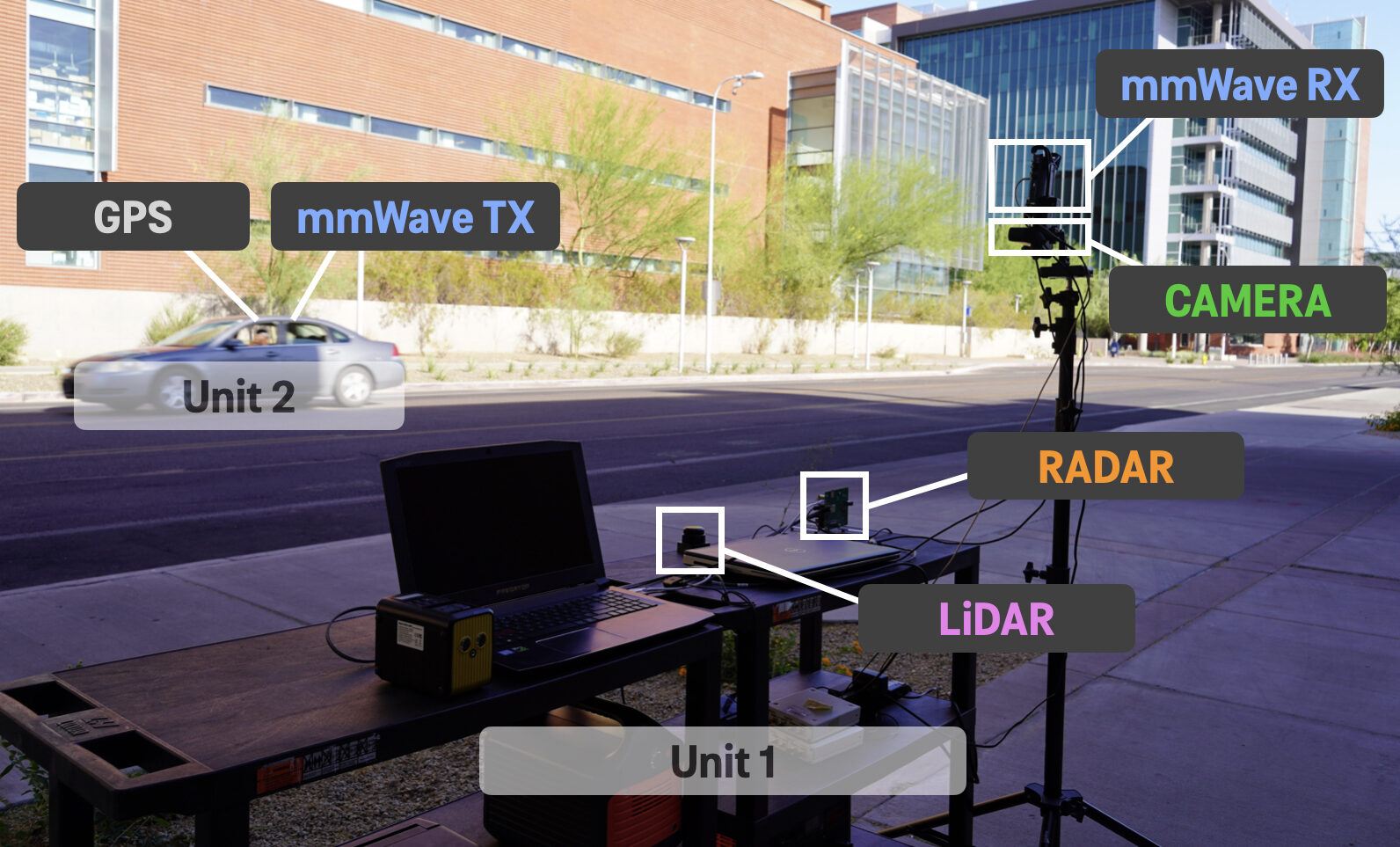

This testbed emulates a vehicle-to-infrastructure communication scenario with two units: Unit 1 (a stationary basestation unit with radar, LiDAR, camera, GPS sensors, and a mmWave phased array receiver) and Unit 2 (a vehicle with a GPS receiver and a mmWave omni-directional transmitter).

Hardware Elements at Unit 1

RGB Camera

110° FoV – 30 FPS – Wide Angle – f/1.8 Aperture

Output: RGB images of dimensions 960 x 540

mmWave receiver

60GHz phased array – 16-element ULA – 64-beam codebook

You can check and plot the exact (measured) beam patterns using this link:

Output: 64-element vector with the receiver power at every beam
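As a minimal sketch of how the 64-element power vector might be used, the snippet below picks the strongest beam and the top few candidates from one measurement. The function names, the zero-based indexing, and the example values are assumptions for illustration; they are not taken from the dataset files.

```python
def best_beam(power_vector):
    """Return (index, power) of the strongest beam in a received-power vector."""
    if len(power_vector) == 0:
        raise ValueError("power vector is empty")
    idx = max(range(len(power_vector)), key=lambda i: power_vector[i])
    return idx, power_vector[idx]


def top_k_beams(power_vector, k=3):
    """Return the indices of the k strongest beams, strongest first."""
    order = sorted(range(len(power_vector)),
                   key=lambda i: power_vector[i], reverse=True)
    return order[:k]


# Hypothetical measurement: 64 beams, zero-based indices (an assumption).
powers = [0.01] * 64
powers[19], powers[20], powers[21] = 0.4, 0.9, 0.5

index, power = best_beam(powers)
candidates = top_k_beams(powers, k=3)
```

Keeping the top few beams rather than only the best one is a common choice when evaluating beam prediction, since neighbouring beams often receive similar power.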

Radar

FMCW radar with 3 Tx & 4 Rx antennas – fully-digital reception – 10 FPS – frequency range 76 to 81 GHz with 4 GHz bandwidth – Max. range ~ 100 m – Range resolution of 60 cm

Output: 3D complex I/Q radar measurements of (number of Rx antenna) x (samples per chirp) x (chirps per frame) : 4x256x128
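The radar figures above can be related with the standard FMCW range-resolution formula, ΔR = c / (2B), where B is the bandwidth swept by one chirp. The sketch below is only a sanity check under that formula: a full 4 GHz sweep would give roughly 3.75 cm, so the quoted 60 cm suggests that each chirp sweeps about 250 MHz. That reading is an inference, not something the source states, and the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz):
    """FMCW range resolution for a given swept chirp bandwidth."""
    return C / (2.0 * bandwidth_hz)


def bandwidth_for_resolution_hz(resolution_m):
    """Swept bandwidth that yields a given range resolution."""
    return C / (2.0 * resolution_m)


def samples_per_frame(n_rx, samples_per_chirp, chirps_per_frame):
    """Number of complex I/Q samples in one radar frame (data cube)."""
    return n_rx * samples_per_chirp * chirps_per_frame


full_sweep_resolution = range_resolution_m(4e9)       # about 0.0375 m
implied_bandwidth = bandwidth_for_resolution_hz(0.6)  # about 250 MHz
cube_size = samples_per_frame(4, 256, 128)            # 4 x 256 x 128 cube
```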

LiDAR

Range: 40 m – 10 FPS – Resolution of 3 cm – FoV of 360° – Accuracy ~ 5 cm – Wavelength ~ 905 nm

Output: 360-degree point cloud sampling data

Hardware Elements at Unit 2

GPS-RTK

10 measurements per second – Horizontal positional accuracy ~ 2.5m without RTK fix and ~ 10cm with RTK fix

Output: Latitude and Longitude, among other values
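Since the GPS output is a latitude/longitude pair, one common derived quantity is the ground distance between the two units. The sketch below uses the haversine formula on a spherical Earth, which is an approximation chosen here for illustration; the coordinates are hypothetical points near Tempe, not values from the dataset.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Hypothetical positions of Unit 1 (basestation) and Unit 2 (vehicle).
unit1 = (33.4200, -111.9300)
unit2 = (33.4203, -111.9296)
distance_m = haversine_m(*unit1, *unit2)
```

At the tens-of-metres scale of these scenarios, the spherical approximation error is far smaller than the ~10 cm RTK accuracy quoted above would suggest is needed for most uses, but a local projection may be preferable when centimetre-level geometry matters.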

mmWave Transmitter

60GHz – Omni transmission (always oriented towards the receiver)

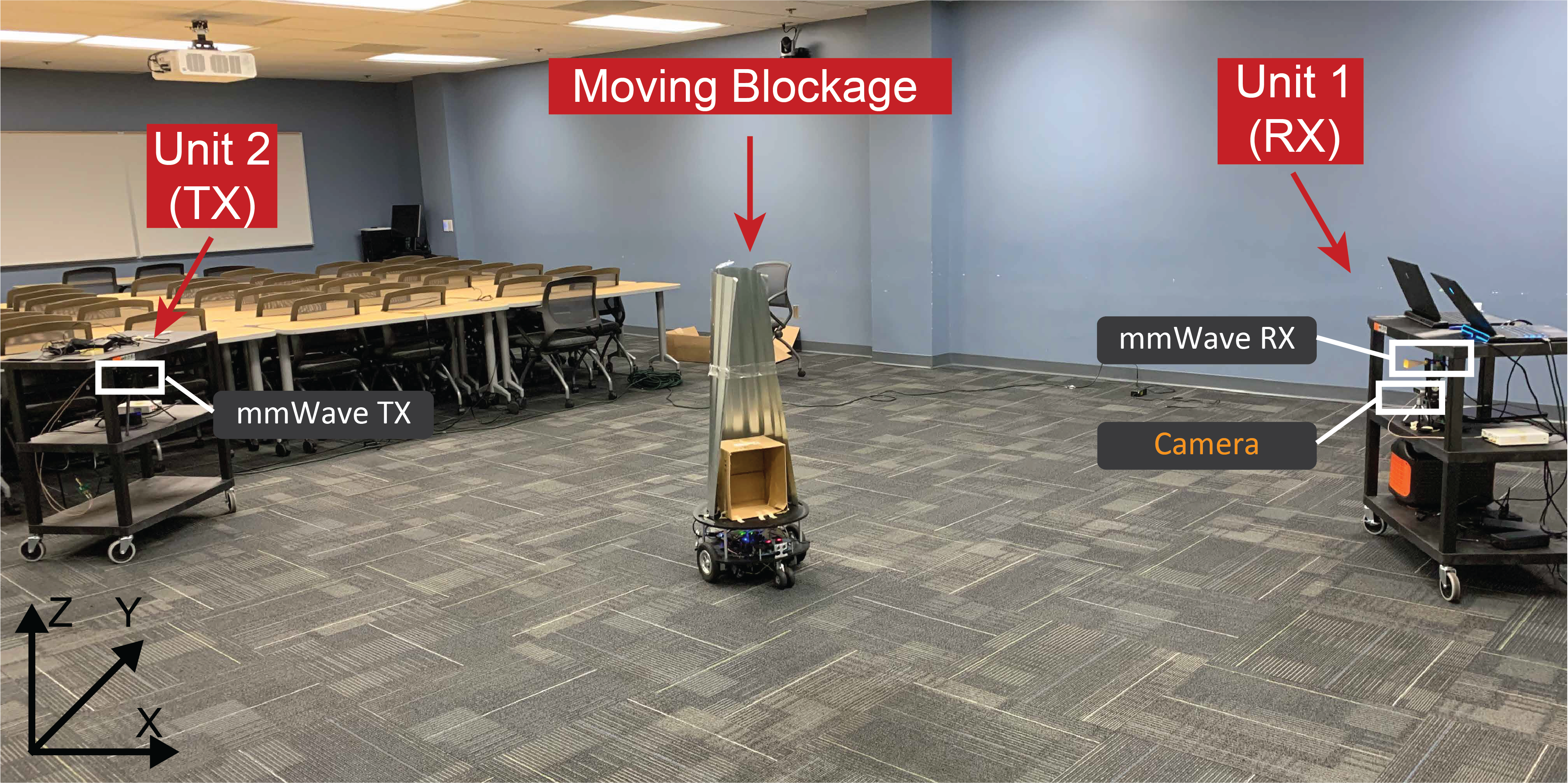

This testbed has two stationary units:

Unit 1 (a 60 GHz mmWave receiver with a horn antenna and an RGB camera) and

Unit 2 (60 GHz mmWave omni-directional transmitter)

Hardware Elements at Unit 1

RGB Camera

69° x 42° (H x V) FoV – 30 FPS – Rolling shutter – 2MP sensor resolution

Output: RGB images of dimensions 1920 x 1080

mmWave receiver

60GHz – 10° beamwidth (20 dBi) horn antenna

Hardware Elements at Unit 2

mmWave Transmitter

60GHz – Omni transmission (always oriented towards the receiver) – 20MHz bandwidth – 64 subcarriers

This testbed has two units: Unit 1 (a stationary basestation unit with radar, LiDAR, camera, GPS sensors and a mmWave phased array receiver) and Unit 2 (a stationary user with a GPS receiver and mmWave omni-directional transmitter)

Hardware Elements at Unit 1

RGB Camera

110° FoV – 30 FPS – Wide Angle – f/1.8 Aperture

Output: RGB images of dimensions 960 x 540

mmWave receiver

60GHz phased array – 16-element ULA – 64-beam codebook

You can check and plot the exact (measured) beam patterns using this link:

Output: 64-element vector with the receiver power at every beam

Hardware Elements at Unit 2

mmWave Transmitter

60GHz – Omni transmission (always oriented towards the receiver)

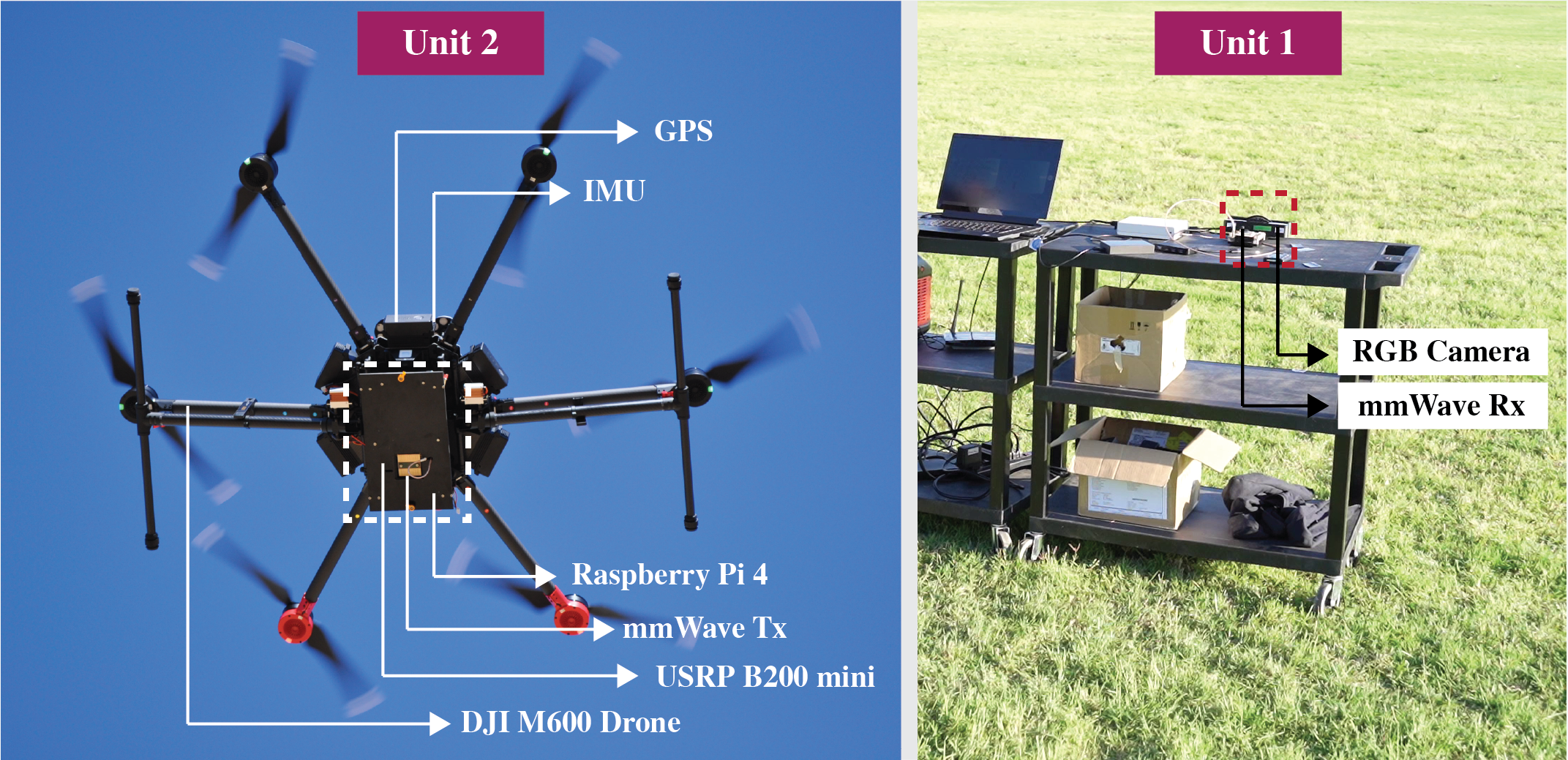

This testbed emulates a drone-to-infrastructure communication scenario and has two units: Unit 1 (a stationary basestation unit with camera, GPS sensors, and a mmWave phased array receiver) and Unit 2 (a mobile drone with a GPS receiver, IMU, and mmWave omni-directional transmitter).

Hardware Elements at Unit 1

RGB Camera

110° FoV – 30 FPS – Wide Angle – f/1.8 Aperture

Output: RGB images of dimensions 960 x 540

mmWave receiver

60GHz phased array – 16-element ULA – 64-beam codebook

You can check and plot the exact (measured) beam patterns using this link:

Output: 64-element vector with the receiver power at every beam

Hardware Elements at Unit 2

mmWave Transmitter

60GHz – Omni transmission (always oriented towards the receiver)

GPS: A3 Flight Controller

Hovering accuracy: Horizontal ~ 1.5m and Vertical ~ 0.5m – D-RTK GNSS: Horizontal ~ 0.01m and Vertical ~ 0.02m

Output: Latitude and Longitude, among other values

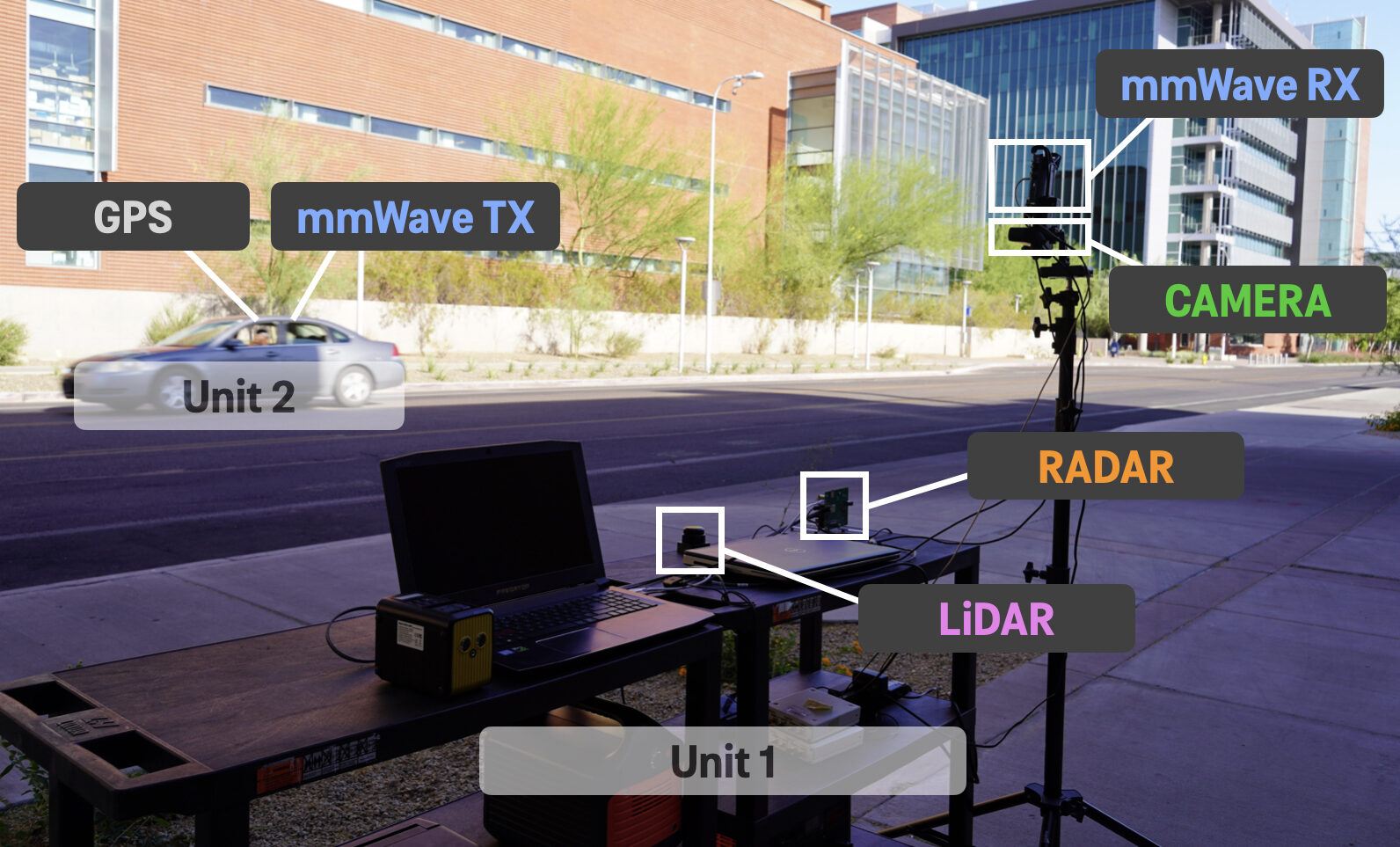

This testbed emulates a vehicle-to-infrastructure communication scenario with two units: Unit 1 (a stationary basestation unit with radar, 3D LiDAR, camera, GPS sensors, and a mmWave phased array receiver) and Unit 2 (a vehicle with a GPS receiver and a mmWave omni-directional transmitter).

Hardware Elements at Unit 1

RGB Camera

110° FoV – 30 FPS – Wide Angle – f/1.8 Aperture

Output: RGB images of dimensions 960 x 540

mmWave receiver

60GHz phased array – 16-element ULA – 64-beam codebook

You can check and plot the exact (measured) beam patterns using this link:

Output: 64-element vector with the receiver power at every beam

Radar

FMCW radar with 3 Tx & 4 Rx antennas – fully-digital reception – 10 FPS – frequency range 76 to 81 GHz with 4 GHz bandwidth – Max. range ~ 100 m – Range resolution of 60 cm

Output: 3D complex I/Q radar measurements of (number of Rx antenna) x (samples per chirp) x (chirps per frame) : 4x256x250

3D LiDAR

Resolution: 32 Channels (Vertical) and 1024 (Horizontal) – Range: 120 m – FPS: 10/20 – FoV : 45° (±22.5º) Vertical and 360° Horizontal – Accuracy ~ 5 cm – Wavelength: 865 nm

Output: 360-degree point cloud sampling data

Hardware Elements at Unit 2

GPS-RTK with GNSS Multi-Band L1/L2 Surveying Antenna

10 measurements per second – Horizontal positional accuracy ~ 2.5m without RTK fix and ~ 10cm with RTK fix

Output: Latitude and Longitude, among other values

mmWave Transmitter

60GHz – Omni transmission (always oriented towards the receiver)

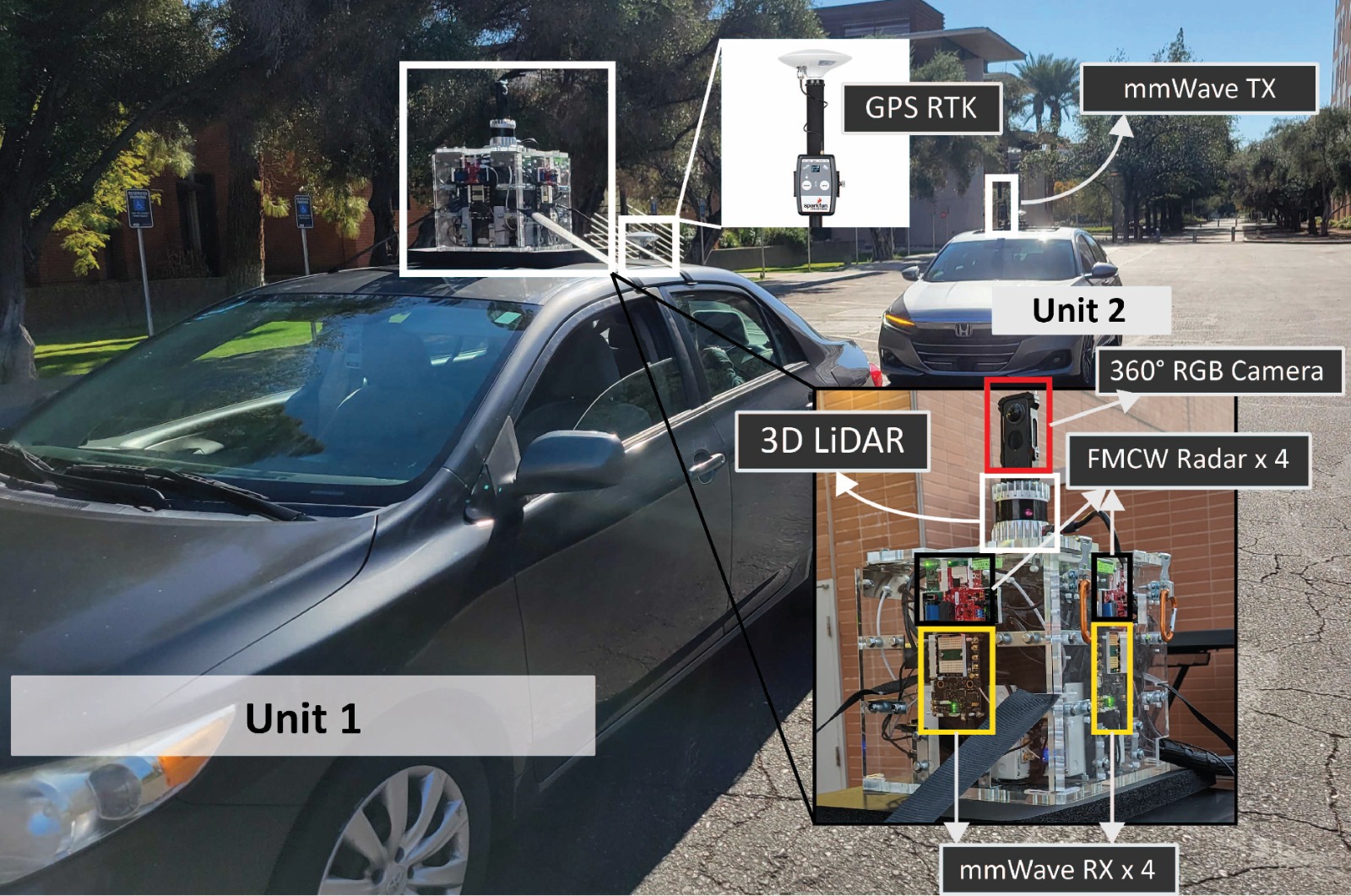

This testbed emulates a vehicle-to-vehicle (V2V) communication scenario with two units: Unit 1 (a vehicle with four radars, LiDAR, a 360° camera, GPS sensors, and four mmWave phased array receivers) and Unit 2 (a vehicle with a GPS receiver and a mmWave omnidirectional transmitter).

Hardware Elements at Unit 1

GPS-RTK

10 measurements per second – Horizontal positional accuracy ~0.5 m without RTK fix and ~ 1 cm with RTK fix

Output: Latitude and Longitude among other values

360° RGB Camera

360° 5.7K resolution @ 30 FPS

Aperture: F2.0

Focal length (35mm equivalent): 7.2 mm

For all available specs, see the Insta360 one X2 spec page

Output: Individual images (with 90° and 180° fields of view) rendered from a 360° video

Radar

FMCW radar with 1 Tx & 4 Rx antennas – fully-digital reception – 10 FPS – frequency range 76 to 81 GHz with 4 GHz bandwidth – Max. range ~ 100 m – Range resolution of 60 cm

Output: 3D complex I/Q radar measurements of (number of Rx antenna) x (samples per chirp) x (chirps per frame) : 4x256x128 matrix per radar

3D LiDAR

Resolution: 32 Channels (Vertical) and 1024 (Horizontal) – Range: 120 m – FPS: 10/20 – FoV: 45° (±22.5º) Vertical and 360° Horizontal – Accuracy ~ 5 cm – Wavelength: 865 nm

Output: 360° point cloud sampling data

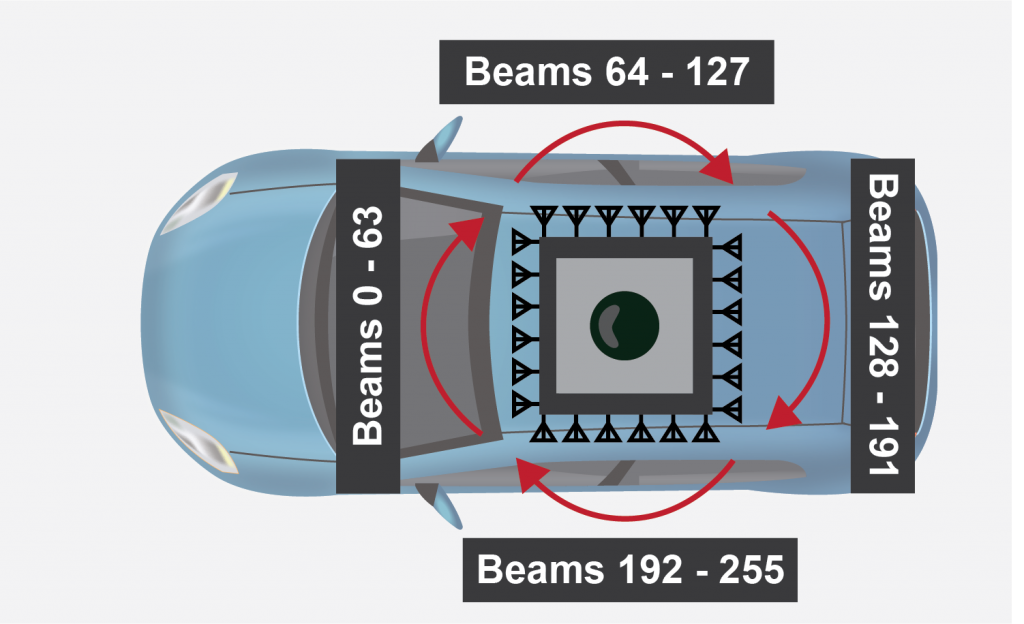

mmWave receiver (4x Phased array)

Each phased array is a 16-element ULA operating at 62.64 GHz center frequency using a pre-defined 64-beam codebook that sweeps from -45º to +45º in azimuth (approximately).

Follow the button below to download the beams and plot the beam pattern of one phased array:

One phased array is placed on each side of the box. We index the data collected from the phased arrays from 1 to 4: array 1 points to the front of the car, array 2 to the right, array 3 to the back, and array 4 to the left, as indicated in the image below.

Output: Each phased array measures the received power in each of the 64 beams. In total, unit 1 mmWave power measurements consist of four 64-element vectors.
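To give a feel for what a 64-beam codebook on a 16-element ULA looks like, the sketch below computes ideal steering weights and the resulting array gain. It assumes half-wavelength element spacing and beams spaced uniformly in angle between -45° and +45°; neither assumption comes from the source, and the measured beam patterns available for download will differ from this idealized model. All function names are hypothetical.

```python
import cmath
import math


def steering_weights(n_elements, steer_deg, spacing_wavelengths=0.5):
    """Unit-norm phase-only weights that steer a ULA towards steer_deg."""
    step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(steer_deg))
    scale = 1.0 / math.sqrt(n_elements)
    return [scale * cmath.exp(1j * step * n) for n in range(n_elements)]


def array_gain(weights, angle_deg, spacing_wavelengths=0.5):
    """Power gain of the weighted array for a plane wave from angle_deg."""
    step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(angle_deg))
    response = sum(w.conjugate() * cmath.exp(1j * step * n)
                   for n, w in enumerate(weights))
    return abs(response) ** 2


def uniform_codebook_angles(n_beams=64, start_deg=-45.0, stop_deg=45.0):
    """Beam directions spaced uniformly in angle (an assumed layout)."""
    step = (stop_deg - start_deg) / (n_beams - 1)
    return [start_deg + i * step for i in range(n_beams)]


angles = uniform_codebook_angles()
weights = steering_weights(16, angles[40])
# Gain of beam 40 evaluated in every codebook direction.
pattern = [array_gain(weights, a) for a in angles]
```

Under these assumptions the peak gain equals the number of elements (16, about 12 dB) in the steered direction and falls off in the other directions.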

Hardware Elements at Unit 2

GPS-RTK

10 measurements per second – Horizontal positional accuracy ~ 0.5 m without RTK fix and ~ 1 cm with RTK fix

Output: Latitude and Longitude, among other values

mmWave Transmitter

60GHz – Omnidirectional mmWave transmission

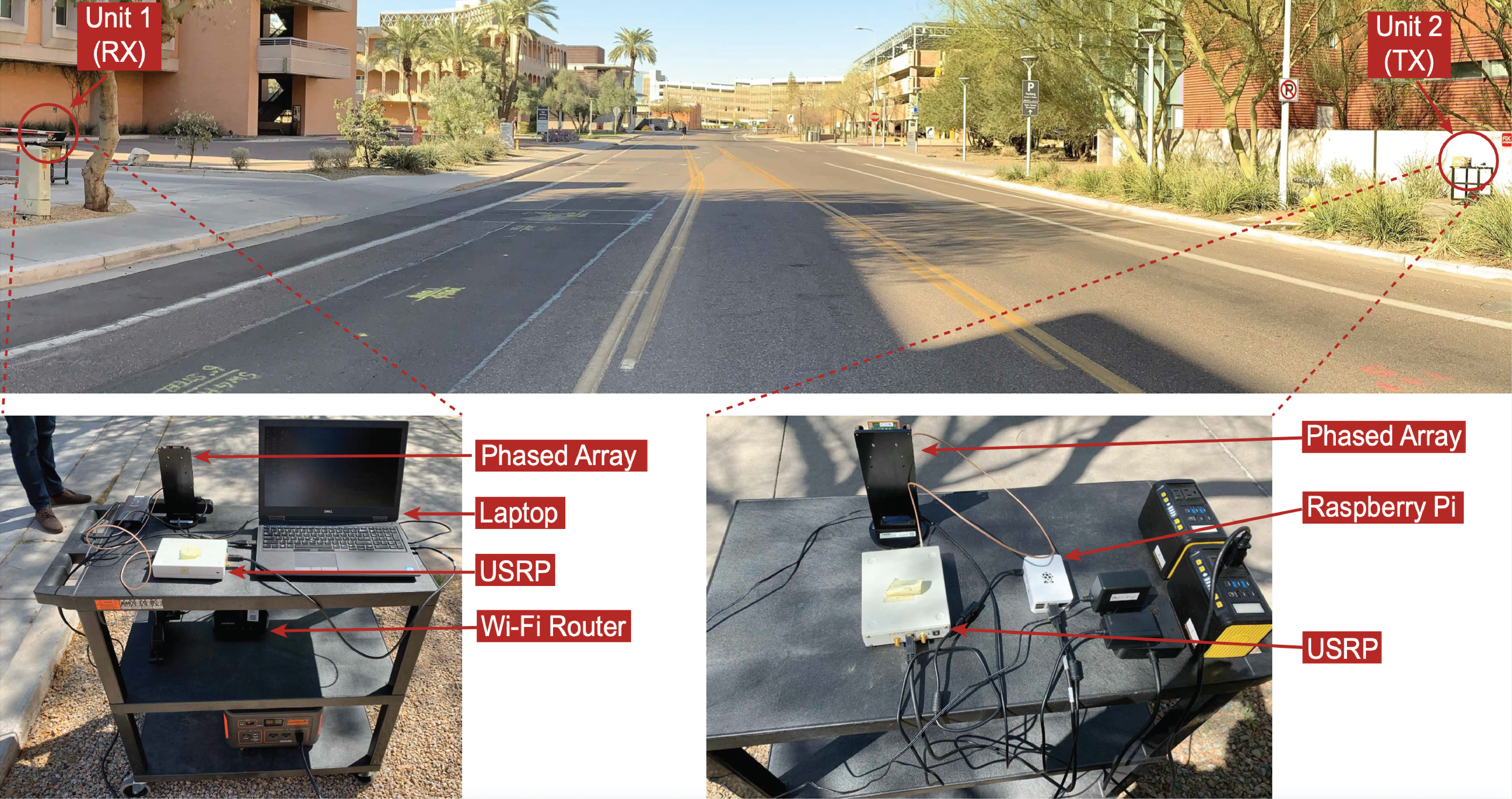

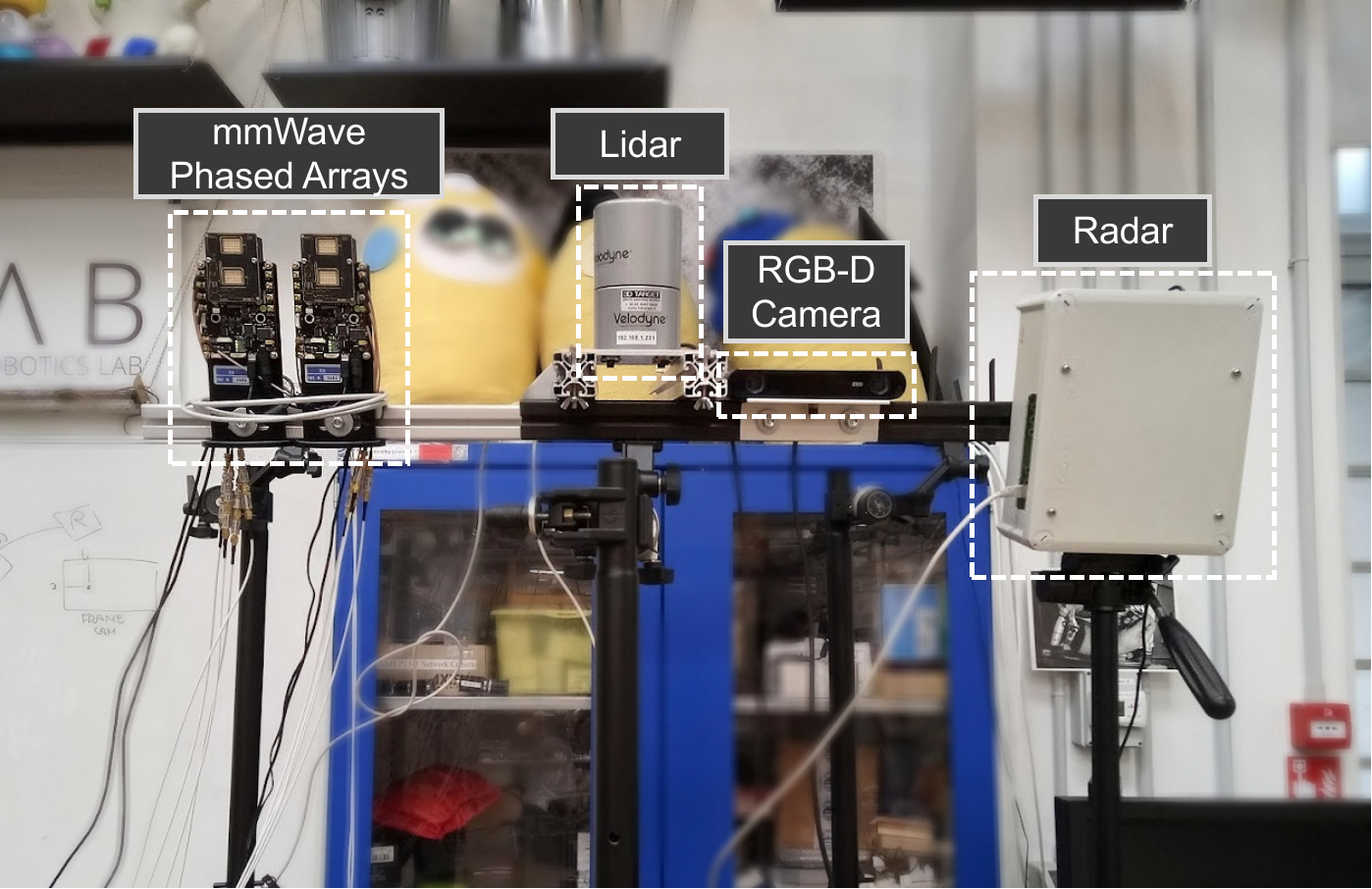

This testbed’s main goal is to compare ISAC sensing and communication waveforms with multimodal sensing, namely radar, image, and LiDAR sensing. It comprises a single unit, Unit 1 (static), which has one radar, one 3D LiDAR, an RGB camera, and two mmWave phased arrays (one transmitter and one receiver).

Hardware Elements at Unit 1

RGB-D Camera

2688 x 1520 pixels resolution @ 30 FPS – Aperture: F1.8 – Focal length: 2.1 mm – 120° Wide-angle FoV – For more information, see the ZED 2i specs.

Output: Individual RGB images (.jpg)

Radar

MIMO FMCW radar with 12 Tx and 16 Rx antennas in a cascaded architecture. All receive antennas receive simultaneously, but transmit antennas are used sequentially. Fully digital reception @10 FPS. Frequency range of 76 to 81 GHz with maximum 4 GHz bandwidth. Specific radar parameters vary across scenarios and should be consulted in the respective scenario pages (for example, Scenarios 42-44)

Output: two range-angle matrices (in the same .mat file), one with zero-Doppler and one with non-zero Doppler

3D LiDAR

Resolution: 32 Channels (Vertical) and 2250 (Horizontal) – Range: 100 m – FPS: 5-20 – FoV: ~40º (+10º to -30º) Vertical and 360° Horizontal – Accuracy ~ 2 cm – Wavelength: 903 nm

Output: 3D point cloud data (.pcd)

mmWave transmitter and receiver (2x Phased array)

Each phased array is a 16-element URA (2 vertical, 8 horizontal) operating at 60 GHz center frequency using a pre-defined 64-beam codebook that sweeps from -45º to +45º in azimuth (approximately).

Follow the button below to download the beams and plot the beam pattern of one phased array:

As shown in the image, the arrays are side by side. One array transmits the ISAC signal in a given beam, and the other receives it in the same beam. This is done at a rate of 10 Hz. For a more detailed description of the ISAC subsystem, refer to this paper.

Output: Each phased array measures the received power in each of the 64 beams. In total, unit 1 mmWave power measurements consist of four 64-element vectors.

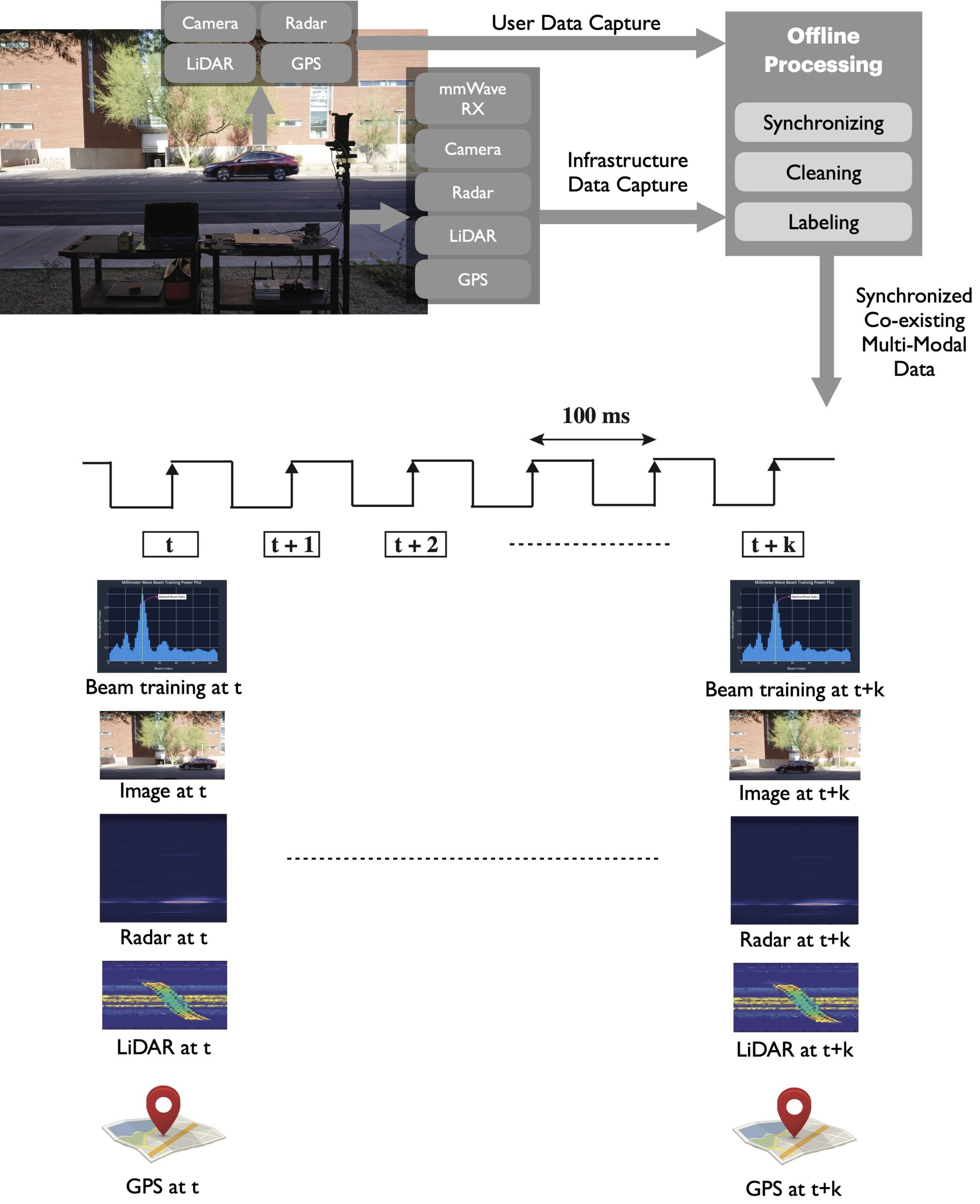

Data Collection Process

Scene Planning

The DeepSense 6G dataset is a real-world multi-modal dataset collected from multiple locations in Arizona, USA (Tempe and Chandler). The primary aim of this dataset is to enable a wide range of applications in communications, sensing, and localization. To develop a diverse, challenging, and realistic dataset that encourages novel solutions for these applications, we adopt the following:

- Multiple locations: The 30+ scenarios in the DeepSense 6G dataset were collected across more than 12 different indoor and outdoor locations with varying features, such as busy downtown streets with different vehicle speeds, streets with three-way intersections, and parking lots, to name a few. Each location was carefully selected to capture challenging and realistic scenarios.

- Different communication scenarios: The dataset scenarios vary from vehicle-to-infrastructure communication, to drone communications, to communications with pedestrians, robots, and more, to cover the various aspects of beyond 5G communication use cases. The dataset scenarios/data also cover mmWave MIMO and sub-6GHz communication, and communication with Reconfigurable Intelligent Surfaces (RISs).

- Varying weather conditions: Weather conditions play a critical role and can impact the performance of several sensing modalities, such as vision, GPS, and wireless data. For this reason, the data was collected in different weather conditions (sunny, cloudy, windy, and rainy), with temperatures ranging from 1 °C to 48 °C and air humidity ranging from 5% to 97%.

- Different times of the day: To further increase the diversity of the DeepSense 6G dataset, we collected data at different times of the day. From early morning to late night, the scenarios of DeepSense 6G cover the entire spectrum.

DeepSense 6G consists not only of vehicular scenarios but also of scenarios with humans and drones at the transmitter. Furthermore, to generate a realistic dataset, we adopted different basestation heights and varying distances between the basestation and the transmitter across the scenarios. For further details regarding the different scenarios, please refer here.

Sensor Alignment

As described in the DeepSense Testbeds section above, the different sensors, such as the RGB camera, LiDAR, and radar, have varying FoV and range. To achieve a high-quality multi-modal dataset with multiple sensors, it is essential to align these sensors during the data collection process. We perform a number of calibration steps prior to data collection to align the sensors, taking into consideration their different ranges and FoVs.

Sensor Data Synchronization

The different sensing elements, such as the mmWave receiver, RGB camera, GPS RTK kit, LiDAR, and radar, have varying data capture rates. For instance, the mmWave receiver runs at 8-9 Hz, the camera captures data at 30 Hz, and the GPS RTK kit runs at 10 Hz. Therefore, cross-modality data alignment is necessary to obtain meaningful data, and we take a series of actions to achieve it. For example, the exposure of the camera is triggered right after the beam sweeping is completed. Since the camera’s exposure time is nearly instantaneous, this method generally yields good data alignment between the camera and the mmWave receiver. Further, the timestamps of the image capture and the GPS data are stored. These timestamps help in aligning the image, mmWave, and GPS data during post-processing. Similar steps are performed for the other modalities to ensure coherent data for each time step.
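The timestamp-based alignment described above can be sketched as a nearest-neighbour match: for each sample of a reference modality (say, a completed beam sweep), pick the sample of another modality whose timestamp is closest, and discard the pair if the gap is too large. This is a generic illustration, not the project's actual post-processing code; the function names, the 50 ms tolerance, and the example timestamps are assumptions.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Index of the timestamp in sorted_times closest to t (ties go earlier)."""
    if not sorted_times:
        raise ValueError("no timestamps to match against")
    pos = bisect_left(sorted_times, t)
    if pos == 0:
        return 0
    if pos == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[pos - 1], sorted_times[pos]
    return pos if (after - t) < (t - before) else pos - 1


def align(reference_times, other_times, max_gap_s=0.05):
    """For each reference timestamp, the matching index in other_times or None."""
    matches = []
    for t in reference_times:
        j = nearest_index(other_times, t)
        matches.append(j if abs(other_times[j] - t) <= max_gap_s else None)
    return matches


# Hypothetical timestamps in seconds: beam sweeps at roughly 8-9 Hz,
# camera frames at 30 Hz.
sweep_times = [0.000, 0.115, 0.230]
camera_times = [i / 30 for i in range(10)]
camera_for_sweep = align(sweep_times, camera_times)
```

Choosing the slowest modality as the reference keeps one matched sample per time step and avoids duplicating its measurements across faster streams.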

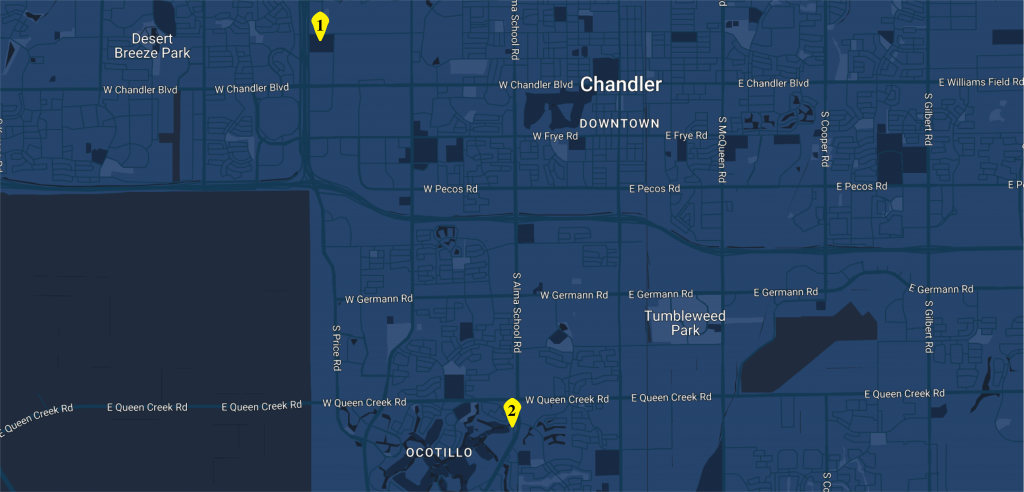

Data Collection Map

The scenarios in the DeepSense 6G dataset were collected across different locations in Arizona, USA. In this section, we provide the data collection map highlighting the different locations used for the data collection.

Tempe, Arizona, USA

DeepSense 6G: Tempe

1. McAllister Ave.

2. ECEE Dept., ASU

3. BioDesign ASU

4. Lot-59

5. University Drive

6. Rural Rd.

7. College Ave.

Chandler, Arizona, USA

DeepSense 6G: Chandler

1. Thude Park

2. Alma School Road