Position-Aided Beam Prediction

Leaderboard

| Date | Name | scenario1 | scenario2 | scenario3 | scenario4 | scenario5 | scenario6 | scenario7 | scenario8 | scenario9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1/15/2021 | Wireless Intelligence Lab ASU | Top-1: 55.59% Top-2: 81.01% Top-3: 90.70% | Top-1: 49.04% Top-2: 70.91% Top-3: 84.82% | Top-1: 32.45% Top-2: 47.45% Top-3: 59.13% | Top-1: 28.37% Top-2: 43.69% Top-3: 56.36% | Top-1: 41.17% Top-2: 64.00% Top-3: 77.91% | Top-1: 41.64% Top-2: 67.27% Top-3: 82.08% | Top-1: 27.33% Top-2: 46.92% Top-3: 58.37% | Top-1: 21.77% Top-2: 32.84% Top-3: 40.28% | Top-1: 38.82% Top-2: 54.22% Top-3: 64.82% |

- This table documents the proposed position-aided beam prediction solutions and provides a basis for benchmarking them.

- For the individual DeepSense scenarios (development datasets), we use the “Top-k” beam prediction accuracy as the evaluation metric.

- For further details and information regarding the ML challenge and how to participate, please check the “ML Challenge” section below.

License

A. Alkhateeb, G. Charan, T. Osman, A. Hredzak, J. Morais, U. Demirhan, and N. Srinivas, “DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset,” IEEE Communications Magazine, 2023.

@Article{DeepSense,
  author={Alkhateeb, Ahmed and Charan, Gouranga and Osman, Tawfik and Hredzak, Andrew and Morais, Joao and Demirhan, Umut and Srinivas, Nikhil},
  title={DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset},
  journal={IEEE Communications Magazine},
  year={2023},
  publisher={IEEE}}

J. Morais, A. Behboodi, H. Pezeshki, and A. Alkhateeb, “Position Aided Beam Prediction in the Real World: How Useful GPS Locations Actually Are?,” in submission to IEEE GLOBECOM, 2022.

@Article{JoaoPosition,
  author = {Morais, J. and Behboodi, A. and Pezeshki, H. and Alkhateeb, A.},
  title = {Position Aided Beam Prediction in the Real World: How Useful GPS Locations Actually Are?},
  publisher = {arXiv},
  doi = {10.48550/ARXIV.2205.09054},
  url = {https://arxiv.org/abs/2205.09054},
  year = {2022},}

Introduction

Beam selection is a challenge: Millimeter-wave (mmWave) and sub-terahertz (sub-THz) communications are key pillars for current and future wireless communication networks. The large available bandwidth at the high frequency bands enables these systems to satisfy the increasing data rate demands of the emerging applications in autonomous driving, edge computing, mixed-reality, etc. These systems, however, require deploying large antenna arrays at the transmitters and/or receivers and using narrow beams to guarantee sufficient receiver power. Finding the best beams (out of a codebook) at the transmitter and receiver is associated with high beam training overhead (search time to find/align the best beams), which makes it hard for these systems to support highly-mobile and latency-sensitive applications.

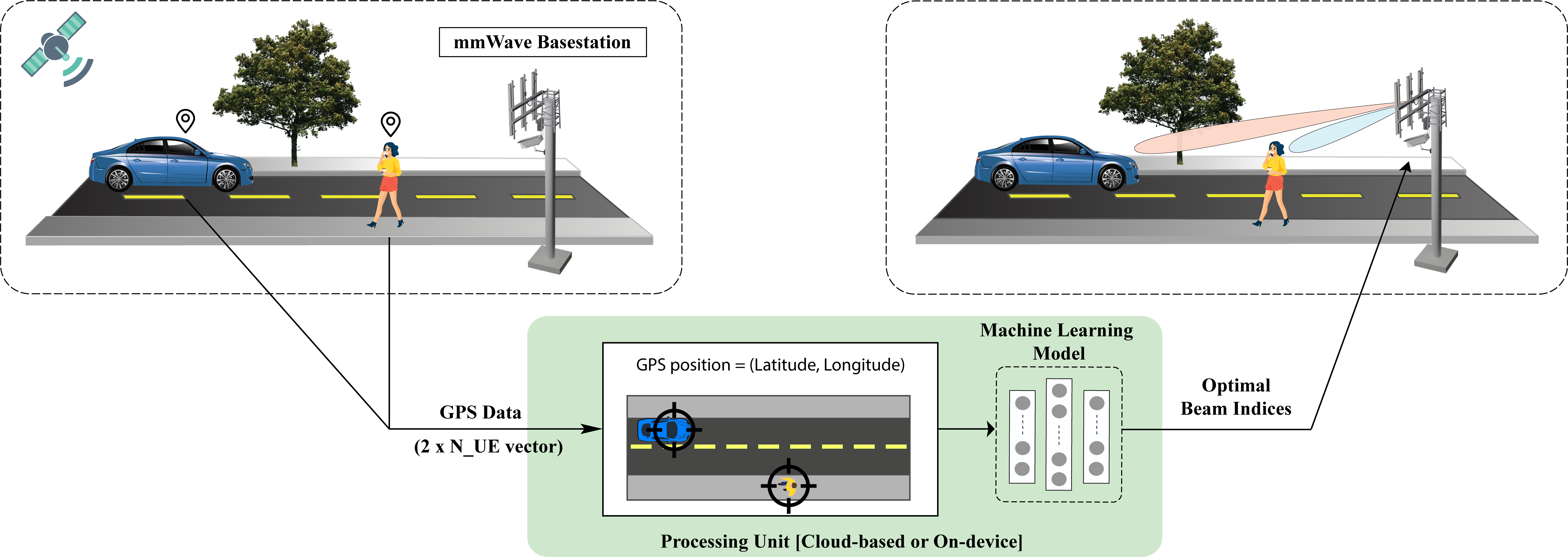

Sensing-aided beam prediction is a promising solution: mmWave/sub-THz communication systems depend on line-of-sight links between the transmitter and receiver, so awareness of their locations and of the surrounding environment (geometry of the buildings, moving scatterers, etc.) could help the beam selection process. For example, the sensory data collected by RGB cameras, LiDARs, radars, GPS receivers, etc., can help the transmitter/receiver decide where to point their beams (or at least narrow down the candidate beam steering directions). We call this approach sensing-aided beam prediction/selection. Position-aided beam prediction is the special case in which the basestation leverages position data about the user, in the form of GPS reports, to predict the optimal beam indices for that user.

Position-aided beam prediction: Specific Task Description

Position-aided beam prediction at the infrastructure is the task of predicting the optimal beam indices from a pre-defined codebook by utilizing a machine learning model and the positions of the user equipment (UE) captured by the integrated GPS.

Objective of the ML Task: Given the GPS position of the UE captured at any time step t, the primary objective of this task is to design a machine learning model that predicts the optimal beam index. From the wireless communication perspective, the model aims to return the index of the beam (from a pre-defined codebook) that maximizes the received signal-to-noise ratio (SNR). In general, the model is expected to return the ordered set of the K most likely beams, i.e., the top-K beams. For ML model development, we provide a labeled dataset consisting of GPS positions (input to the ML model) and the ground-truth beam indices (labels). More details regarding the dataset are provided in the Dataset section below.
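To make the input/output contract of the task concrete, here is a minimal sketch of a nearest-neighbor baseline that maps a GPS position to an ordered list of candidate beams. It is only an illustration and not necessarily the method behind the leaderboard entries. The function name `knn_top_k_beams`, its parameters, and the use of plain Euclidean distance on raw latitude/longitude values are assumptions made for this example.

```python
from collections import Counter
import math


def knn_top_k_beams(train_positions, train_beams, query, n_neighbors=5, k=3):
    """Return up to k beam indices, ordered by votes among the nearest samples.

    train_positions: list of (latitude, longitude) tuples (hypothetical layout).
    train_beams:     list of ground-truth beam indices, one per position.
    query:           (latitude, longitude) of the sample to predict.
    """
    # Euclidean distance on raw degrees: a simplification that is only
    # reasonable over a small geographic area.
    distances = [
        (math.hypot(lat - query[0], lon - query[1]), beam)
        for (lat, lon), beam in zip(train_positions, train_beams)
    ]
    nearest = sorted(distances, key=lambda item: item[0])[:n_neighbors]

    # Each neighbor votes for its own beam; the most voted beams come first.
    votes = Counter(beam for _, beam in nearest)
    return [beam for beam, _ in votes.most_common(k)]
```

Note that this sketch can return fewer than k beams when the neighbors agree on fewer than k distinct indices, so a real submission would need a rule for filling the remaining slots.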

For further information regarding how position can aid the beam prediction task, please refer to our paper.

Task-Specific Dataset

DeepSense 6G: Developing efficient solutions for sensing-aided beam prediction and accurately evaluating them requires the availability of a large-scale real-world dataset. With this motivation, we built the DeepSense 6G dataset, the first large-scale real-world multi-modal dataset with co-existing communication and sensing data.

In this position-aided beam prediction task, we build development/challenge datasets based on the DeepSense data from scenarios [1 – 7].

For each scenario, we provide the following datasets:

- Development Dataset: It comprises the GPS data and the corresponding 64×1 power vectors obtained by performing beam training with a 64-beam codebook at the receiver (with omnidirectional transmission at the transmitter). Each GPS sample is a 2×1 position vector holding the latitude and longitude in decimal geographical coordinates, e.g. (33.4234234º, -111.344324º). The dataset also provides the optimal beam indices as the ground-truth labels.

- Challenge Dataset: To motivate the development of efficient ML models, we propose a benchmark challenge. For this, we provide a Challenge dataset, consisting of only the input GPS positions. The ground-truth labels are hidden from the users by design to promote a fair benchmarking process. To participate in this Challenge, check the ML Challenge section below for further details.
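The relation between a 64×1 power vector and its ground-truth label can be sketched as follows, assuming the optimal beam is simply the one with the highest received power. The function name `best_beam_index` and the `first_index` parameter are hypothetical; whether the dataset numbers beams from 0 or from 1 is not stated here, so the offset is left configurable.

```python
def best_beam_index(power_vector, first_index=1):
    """Return the index of the strongest beam in a power vector.

    power_vector: sequence of received powers, one entry per codebook beam.
    first_index:  index assigned to the first beam (assumption: 1-based).
    """
    if len(power_vector) == 0:
        raise ValueError("power_vector must not be empty")
    # Position of the maximum power; the first maximum wins on ties.
    strongest = max(range(len(power_vector)), key=lambda i: power_vector[i])
    return strongest + first_index
```

For a three-beam toy vector `[0.1, 0.9, 0.3]`, the function returns 2 with the default 1-based numbering and 1 with `first_index=0`.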

Below we explain how to access the development dataset.


How to Access Task Data?

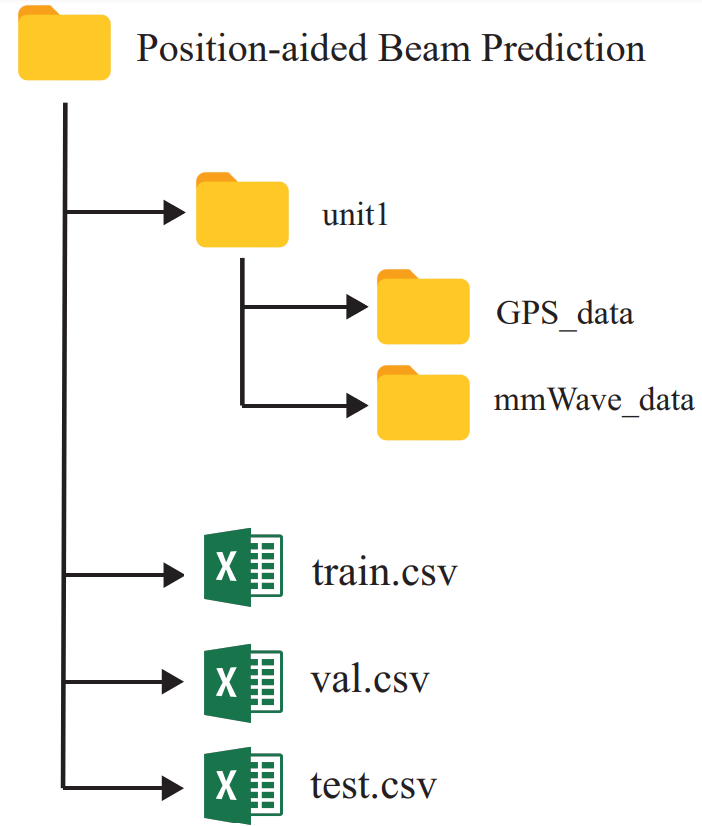

Step 1. Download All Scenarios Dataset

Step 2. Extract the VABT.zip file, which contains the scenario dataset folders.

Each scenario folder consists of the following files:

- unit1: Includes the GPS data and corresponding beams

- train.csv

- val.csv

- test.csv

What does each CSV file contain?

For each data collection sampling time (~100ms), we provide the corresponding visual and wireless data. Further, we compute the ground-truth beam indices from the power vectors and provide them under the “beam_index” column. An example of 5 data samples is shown below.
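As a minimal sketch of reading the labels from one of these files, the snippet below uses only the Python standard library. The “beam_index” column name comes from the description above; the function name `load_beam_labels` and the assumption that the labels are stored as integer text are illustrative, and the remaining column names are not assumed at all.

```python
import csv


def load_beam_labels(csv_path):
    """Read the ground-truth beam indices from a scenario CSV file."""
    with open(csv_path, newline="") as csv_file:
        reader = csv.DictReader(csv_file)
        # Assumption: every row stores its label as an integer string
        # under the "beam_index" column.
        return [int(row["beam_index"]) for row in reader]
```

A call such as `load_beam_labels("train.csv")` would then return one label per data sample, in file order.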

Individual Download Links

To download the individual scenarios, follow the scenario-wise links provided below.


ML Challenge: Position-Aided Beam Prediction

To advance the state-of-the-art in the position-aided beam prediction task, we propose a benchmark challenge based on the DeepSense real-world dataset. The objective of the task is to develop a machine learning-based model that takes in the GPS positions captured at the UE and predicts the optimal beam indices for that mobile user.

This challenge adopts the labeled development dataset described above with GPS positions and the corresponding optimal beams.

Participation Steps

Step 1. Getting started: First, we recommend the following:

- Familiarize yourself with the data collection testbed and the different sensor modalities presented here

- Next, see the Tutorials page, where we provide Python code to load and visualize the different data modalities

Step 4. Submission: After you develop your ML model, you are invited to submit your results to submission@DeepSense6G.net. Please find the submission process and evaluation criteria below.

Submission Process

We define a standardized beam prediction result format that serves as an input to our evaluation code. There are 5 different real-world DeepSense 6G scenarios in this challenge. For each scenario, please submit the following:

- Users must submit the Top-3 predicted beams for the Challenge set. A sample submission CSV file is shown below.

- Every submission should provide the pre-trained models, the evaluation code, and a ReadMe file documenting the requirements to run the code.

| sample_index | top-3 beams |
|---|---|
| 1 | [3,5,7] |
| 2 | [21,23,24] |
| 3 | [62,59,61] |
| 4 | [49,50,37] |
| 5 | [12,14,15] |
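A file with the two columns shown in the sample table could be produced along these lines. This is a sketch under assumptions: the exact header spelling, the bracketed list formatting, and the 1-based `sample_index` are inferred from the table above, and the function name `write_submission` is hypothetical. Check the official submission instructions before relying on this layout.

```python
import csv


def write_submission(path, predictions):
    """Write one row per challenge sample with its three predicted beams.

    predictions: list of length-3 sequences of beam indices, in sample order.
    """
    with open(path, "w", newline="") as csv_file:
        writer = csv.writer(csv_file)
        writer.writerow(["sample_index", "top-3 beams"])
        for sample_index, beams in enumerate(predictions, start=1):
            if len(beams) != 3:
                raise ValueError(f"sample {sample_index} needs exactly 3 beams")
            # Format as "[b1,b2,b3]"; csv.writer quotes the field because
            # it contains commas.
            beam_text = "[" + ",".join(str(beam) for beam in beams) + "]"
            writer.writerow([sample_index, beam_text])
```

Calling `write_submission("scenario1.csv", [[3, 5, 7], [21, 23, 24]])` writes a header row followed by two data rows; the file name here is only a placeholder.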

Evaluation

- The evaluation metric adopted in this challenge is the top-k beam prediction accuracy.

- The top-k accuracy is defined as the percentage of test samples whose ground-truth beam index is among the k beams with the highest predicted scores.

- The evaluation is done based on the ML challenge (hidden) test set, which is used for benchmarking purposes.
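The metric defined above can be sketched in a few lines. This is an illustrative implementation, not the official evaluation code; the function name `top_k_accuracy` and the assumption that each prediction is a list ordered from most to least likely beam are choices made for this example.

```python
def top_k_accuracy(true_beams, predicted_beams, k):
    """Percentage of samples whose true beam is among the first k predictions.

    true_beams:      list of ground-truth beam indices.
    predicted_beams: list of prediction lists, each ordered best-first.
    """
    if len(true_beams) != len(predicted_beams):
        raise ValueError("true_beams and predicted_beams differ in length")
    if not true_beams:
        raise ValueError("at least one sample is required")
    hits = sum(
        1
        for truth, predictions in zip(true_beams, predicted_beams)
        if truth in predictions[:k]
    )
    return 100.0 * hits / len(true_beams)
```

With Top-3 predictions per sample, evaluating the same lists at k = 1, 2, 3 yields the three numbers reported per scenario in the leaderboard format.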

Leaderboard Rules

- To be ranked in the Leaderboard above, please submit your results following the submission process above.

- Contestants must submit the challenge set results for all 5 scenarios to be ranked in the Leaderboard table.