Vision-Aided Blockage Prediction

Task Overview

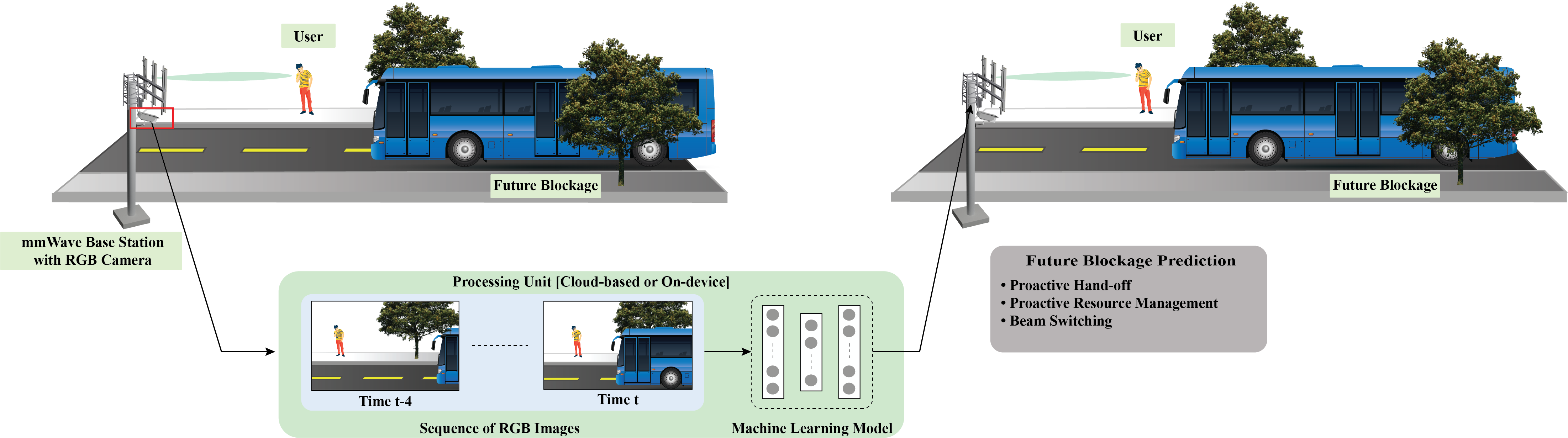


Millimeter-wave (mmWave) and sub-terahertz communications are becoming dominant directions for modern and future wireless networks. With their large bandwidth, they can satisfy the high data rate demands of applications such as wireless virtual/augmented reality (VR/AR) and autonomous driving. Communication in these bands, however, faces several challenges at both the physical and network layers. One of the key challenges stems from the sensitivity of mmWave and terahertz signal propagation to blockages, which forces these systems to rely heavily on maintaining line-of-sight (LOS) connections between the base stations and users. Stationary or dynamic obstacles that block these LOS links can severely affect the reliability and latency of mmWave/terahertz systems. The key to overcoming link blockage lies in enabling the wireless system to develop a sense of its surroundings, which can be accomplished using machine learning. Many recent studies have shown that, using wireless sensory data (e.g., channels or received power), a machine learning model can efficiently differentiate between LOS and non-LOS (NLOS) links. More recently, vision-aided blockage prediction has been proposed as an alternative and efficient solution to the current conventional beam training approach.

Vision-aided blockage prediction is the task of predicting whether the LOS link between a basestation and a user will be blocked in the future, using a machine learning model and images of the wireless environment captured by a camera installed at the basestation. In the figure above, a mmWave basestation deployed at the side of the street serves the two users present in its field of view (FoV). The basestation is further equipped with an RGB camera that captures the wireless environment and provides real-time feedback about the surroundings. Instead of relying only on wireless sensory data, the basestation can use the RGB images and leverage advances in machine learning (more specifically, computer vision) to predict upcoming link blockages before they occur.

Leaderboard

ML Challenge: Introduction

To advance the state of the art in vision-aided blockage prediction, we propose a benchmark challenge that measures performance on our dataset. The objective is to develop a machine learning model that takes a sequence of images captured at the basestation as input and predicts whether the LOS link will be blocked in the future. To this end, we provide a labeled dataset of RGB images and their link blockage status.
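One way to make this objective concrete is to look at how (image sequence, label) training pairs might be formed from a per-frame blockage status. The sketch below is illustrative only: the function `make_blockage_samples`, the window length `seq_len`, and the prediction horizon `horizon` are hypothetical choices, not parameters defined by the challenge. In practice, each frame index would point to the corresponding RGB image.

```python
from typing import List, Tuple


def make_blockage_samples(
    blocked: List[int], seq_len: int, horizon: int
) -> List[Tuple[List[int], int]]:
    """Build (observed frame indices, future-blockage label) pairs.

    Hypothetical helper: blocked[t] is 1 if the LOS link is blocked at
    frame t, else 0. Each sample observes frames start .. start+seq_len-1
    and is labeled 1 if the link is blocked in any of the next `horizon`
    frames.
    """
    samples = []
    # Stop early enough that the full prediction horizon fits in the sequence.
    for start in range(len(blocked) - seq_len - horizon + 1):
        observed = list(range(start, start + seq_len))
        future = blocked[start + seq_len : start + seq_len + horizon]
        samples.append((observed, int(any(future))))
    return samples


samples = make_blockage_samples([0, 0, 0, 0, 0, 1, 1, 0], seq_len=3, horizon=2)
print([label for _, label in samples])  # [0, 1, 1, 1]
```

Labeling a window by "any blockage within the horizon" is one common framing; the official labels may define the prediction window differently.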

Getting Started

To participate in the challenge, participants should first become familiar with the data collection testbed and the different sensor modalities presented here. Next, the Tutorials page provides Python code to load and visualize the different data modalities. This information should help participants prepare for the next step: training machine learning models for vision-aided blockage prediction.

Dataset

The dataset for the vision-aided blockage prediction task comprises a selection of the DeepSense 6G scenarios; in particular, scenarios 5–9 are adopted for this task. These scenarios consist of data collected at different locations and at different times of day, which makes the dataset diverse and realistic. This diversity is further enhanced by using different unit 1 (basestation) heights and varying distances between the basestation and the mobile transmitter (unit 2). For further details, please refer to the respective scenario pages. Download links for the individual scenarios are provided here. The dataset is divided into training, validation, test, and challenge sets. Each of the training, validation, and test sets consists of RGB images and their corresponding ground-truth labels; for the challenge set, only the RGB images are provided.
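Because the labeled splits and the unlabeled challenge set differ only in whether ground truth is present, a single loader can handle both. This is a minimal sketch that assumes each split is described by a CSV file with a hypothetical `image` column (RGB image path) and, for labeled splits, a hypothetical `label` column; the scenario pages describe the actual file layout.

```python
import csv
import io
from typing import IO, List, Optional, Tuple


def load_split(
    f: IO[str], label_column: Optional[str] = "label"
) -> List[Tuple[str, Optional[int]]]:
    """Read (image path, label) rows from a split CSV.

    Hypothetical layout: an 'image' column plus, for the training,
    validation, and test splits, a label column. Pass label_column=None
    for the challenge set, which has no ground truth.
    """
    rows = []
    for record in csv.DictReader(f):
        # Challenge-set rows carry no ground truth, so the label stays None.
        label = int(record[label_column]) if label_column else None
        rows.append((record["image"], label))
    return rows


# Usage with in-memory CSV text; for real files, pass open(path, newline="").
train = load_split(io.StringIO("image,label\nimg_0001.jpg,0\nimg_0002.jpg,1\n"))
challenge = load_split(io.StringIO("image\nimg_0101.jpg\n"), label_column=None)
print(train)      # [('img_0001.jpg', 0), ('img_0002.jpg', 1)]
print(challenge)  # [('img_0101.jpg', None)]
```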

All scenarios can also be downloaded together from the link below.

Data Visualization

We provide a sample image from each of the scenarios adopted in this ML challenge. For further details regarding the scenarios, please follow the link provided in the images.

ML Challenge: Rules

General Rules

- We release the sensor (image) data for the training, validation, and test sets of all five DeepSense 6G scenarios adopted in this challenge.

- The competition uses a challenge (hidden) test set for benchmarking. Participants make predictions on the challenge set and submit the results to our evaluation server. Once a submission meets all the leaderboard criteria listed below, its results are displayed on the leaderboard above.

Submission Rules

- We define a standardized prediction result format that serves as input to our evaluation code. There are five different real-world scenarios in this challenge, and for the challenge (hidden) test set of each scenario, participants must organize their predictions into a CSV file. A sample submission CSV file is shown below.

- Every submission should also include the pre-trained models, the evaluation code, and a README file documenting the requirements for running the code.
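Producing one CSV per scenario can be automated once predictions are available. This is a minimal sketch only: the function `write_submission` and the column layout (`sample`, `pred_1`, ..., `pred_k`, with `pred_1` the highest-ranked prediction) are hypothetical, and the official sample submission file defines the actual format to follow.

```python
import csv
import io
from typing import Dict, IO, List


def write_submission(f: IO[str], predictions: Dict[str, List[int]]) -> None:
    """Write one scenario's ranked predictions as CSV (hypothetical layout)."""
    # Every sample must carry the same number of ranked predictions.
    lengths = {len(ranked) for ranked in predictions.values()}
    if len(lengths) != 1:
        raise ValueError("predictions must be non-empty and have equal length")
    (k,) = lengths
    writer = csv.writer(f)
    writer.writerow(["sample"] + [f"pred_{i + 1}" for i in range(k)])
    for sample_id, ranked in predictions.items():
        writer.writerow([sample_id] + list(ranked))


# Usage with an in-memory buffer; for a real file, use open(path, "w", newline="").
buf = io.StringIO()
write_submission(buf, {"img_0101.jpg": [3, 7], "img_0102.jpg": [12, 11]})
print(buf.getvalue())
```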

Leaderboard Rules

- To be ranked on the leaderboard above, a submission must meet the submission rules listed above.

- Furthermore, since this challenge includes five different scenarios, a submission is ranked only if it includes challenge-set results for all five scenarios.
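Because incomplete submissions are not ranked, it can help to check coverage before submitting. This is a minimal sketch that assumes predictions are grouped in a dictionary keyed by scenario number; `missing_scenarios` is a hypothetical helper, and the required set reflects scenarios 5 to 9 listed in the Dataset section.

```python
from typing import Dict, List, Set

# DeepSense 6G scenarios adopted in this challenge (scenarios 5-9).
REQUIRED_SCENARIOS = {5, 6, 7, 8, 9}


def missing_scenarios(results: Dict[int, List[object]]) -> Set[int]:
    """Return required scenarios that have no challenge-set predictions.

    A scenario counts as missing if it is absent from `results` or its
    prediction list is empty.
    """
    provided = {scenario for scenario, preds in results.items() if preds}
    return REQUIRED_SCENARIOS - provided


print(missing_scenarios({5: [1], 6: [], 7: [0]}))  # {8, 9, 6} in some order
```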

Evaluation Metric

- The evaluation metric adopted in this challenge is top-k accuracy, defined as the percentage of test samples whose ground-truth beam index is among the k beams with the highest predicted scores.
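The definition above can be computed directly. This is a minimal sketch, assuming the model outputs one score per candidate beam index for each sample; the function name and input layout are illustrative assumptions, not the official evaluation code.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]], labels: Sequence[int], k: int
) -> float:
    """Percentage of samples whose ground-truth index is among the k top scores."""
    if not labels or len(scores) != len(labels):
        raise ValueError("need one score vector per label and at least one sample")
    hits = 0
    for sample_scores, label in zip(scores, labels):
        # Indices of the k highest-scoring beams; ties keep the lower index first.
        ranked = sorted(range(len(sample_scores)), key=lambda i: sample_scores[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(labels)


scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
for k in (1, 2, 3):
    print(k, round(top_k_accuracy(scores, labels, k), 2))
# prints "1 33.33", "2 66.67", "3 100.0" on separate lines
```

Top-1 accuracy is the usual single-number summary; larger k rewards models whose correct answer is close to the top of their ranking.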